Yes, it’s true. I’m not an engineer. So, I’ll do my best on this post, as Light Field Capture is a critically important piece of the Mediated Reality ecosystem, and, therefore, to the future of Mediated Reality itself. Here’s my effort. I look forward to comments on this description in my feed. We are all learning all the time!

What is a light field and how is it captured?

A light field describes the light traveling in every direction through every point of a given three-dimensional space. Capturing one with any reliable accuracy therefore requires much more than a standard camera can offer. For the most part, there are two ways of going about capturing a three-dimensional space: 360° cameras and motion capture (mocap).
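To make that definition a little more concrete, here is a minimal Python sketch. It assumes a light field can be treated as a collection of ray samples, each tagged with the position it was seen from and the direction it was seen in. The names `RaySample`, `capture`, and `viewpoints` are made up for illustration and do not come from any real camera software.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple

Position = Tuple[float, float, float]   # x, y, z in meters
Direction = Tuple[float, float]         # azimuth, elevation in degrees


@dataclass(frozen=True)
class RaySample:
    """One measured ray: where it was seen from, the viewing direction, its color."""
    position: Position
    direction: Direction
    color: Tuple[int, int, int]


def capture(position: Position, directions: List[Direction]) -> List[RaySample]:
    """Hypothetical camera: records one ray per direction from a single position."""
    placeholder_color = (0, 0, 0)  # real pixel values would go here
    return [RaySample(position, d, placeholder_color) for d in directions]


def viewpoints(samples: List[RaySample]) -> Set[Position]:
    """Distinct positions from which the scene was observed."""
    return {s.position for s in samples}


# Four horizontal viewing directions, 90 degrees apart.
directions = [(float(az), 0.0) for az in range(0, 360, 90)]

# A standard camera: many directions, but only one position.
single_camera = capture((0.0, 0.0, 0.0), directions)

# A small rig: the same directions, sampled from three positions.
rig = [s for x in (0.0, 0.1, 0.2) for s in capture((x, 0.0, 0.0), directions)]

print(len(single_camera), len(viewpoints(single_camera)))
print(len(rig), len(viewpoints(rig)))
```

The single camera only ever sees the scene from one point, while the rig samples both position and direction, which is the extra information a light field needs.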

Mocap captures a three-dimensional space from the outside, surrounding its target with hundreds of cameras, each at a different angle so as to give as many views of the same scene as possible. The footage from each individual camera is then stitched together until a three-dimensional image is produced. The more cameras, the higher the accuracy of the representation.
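One way to see why several viewpoints can yield depth is the textbook stereo relation between two cameras. The sketch below assumes an idealized pair of identical, parallel pinhole cameras. The function and parameter names are illustrative, and a real multi-camera rig would use far more elaborate reconstruction than this.

```python
def depth_from_disparity(focal_length_px: float,
                         baseline_m: float,
                         disparity_px: float) -> float:
    """Distance to a point seen by two parallel cameras.

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two cameras, in meters
    disparity_px:    how far the point shifts between the two images, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_length_px * baseline_m / disparity_px


# Assumed example values: 1000 px focal length, cameras 0.5 m apart.
near = depth_from_disparity(1000.0, 0.5, 250.0)  # large shift between views
far = depth_from_disparity(1000.0, 0.5, 50.0)    # small shift between views

print(near, far)
```

A point that shifts a lot between the two views is close, and one that barely shifts is far away. Each additional camera gives more of these comparisons to work from.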

360° cameras, on the other hand, stand right in the middle of the action, viewing the scene from the inside out. They are similarly constructed from an array of cameras pointing in as many different directions as possible. And again, more cameras equal higher resolution.

To be clear, not all 360° cameras are light field cameras. Some simply record footage in a variety of directions. The difference is in the level of resolution and sheer number of cameras, or perspectives, being used to construct the final light field.

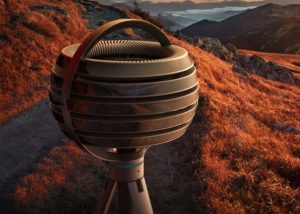

Example of a light field camera

360° cameras enjoy an expanding market as VR experiences become increasingly prominent. The most common example is a stationary apparatus with a large array of cameras, but head-mounted cameras exist as well, meant to capture the experience of a person moving through a light field.

The Lytro Immerge is a camera of the first sort: a stationary rig with hundreds of small cameras pointing in as many different directions as possible. Each one captures a single perspective, and all the footage is then combined internally into a coherent three-dimensional light field.

Once the field is captured, the footage allows for parallax, as well as realistic movement in all six degrees of freedom:

- tilting the head up and down (pitch)

- turning the head from side to side, as when shaking it (yaw)

- rolling the head from shoulder to shoulder (roll)

- moving straight up and down

- swaying side to side

- moving backwards and forwards

This freedom of movement extends only as far as the bounds of the camera's body, which, in the case of the Lytro Immerge, is about one square meter.
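For readers who think in code, the six degrees of freedom and the limit on movement can be sketched as follows. This is only an illustration: the `HeadPose` class, the `inside_capture_volume` function, and the choice of a cube one meter on a side centered on the rig are my assumptions, not Lytro specifications.

```python
from dataclasses import dataclass


@dataclass
class HeadPose:
    """A viewer's head pose: three rotations plus three translations."""
    pitch_deg: float = 0.0  # tilting the head up and down
    yaw_deg: float = 0.0    # turning the head from side to side
    roll_deg: float = 0.0   # rolling the head from shoulder to shoulder
    up_m: float = 0.0       # moving straight up and down
    side_m: float = 0.0     # swaying side to side
    forward_m: float = 0.0  # moving backwards and forwards


def inside_capture_volume(pose: HeadPose, side_length_m: float = 1.0) -> bool:
    """True if the head position stays within the assumed capture volume.

    Assumption: the volume is a cube of the given side length centered on
    the rig. Rotations are unrestricted; only the position is checked.
    """
    half = side_length_m / 2
    return all(abs(v) <= half for v in (pose.up_m, pose.side_m, pose.forward_m))


looking_around = HeadPose(yaw_deg=170.0, side_m=0.3)
stepping_out = HeadPose(forward_m=0.8)

print(inside_capture_volume(looking_around))
print(inside_capture_volume(stepping_out))
```

Turning the head never leaves the captured field, but walking too far forward does, because no camera in the rig ever recorded the scene from that position.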

All of this adds up to a more realistic interaction with a virtual space, one that looks different from every angle and allows you to explore its contours as if you were actually there. These tools also allow the virtual space to map onto reality with a high degree of accuracy. With the footage taken by the Lytro Immerge, it is possible to blend CG into live footage, placing the Real directly beside the Unreal. (Little developer joke to end this post!)